When you want to introduce cloud development environments (CDEs) to your organization, you have to decide between building and buying, and between self-managing and self-hosting. These decisions are not merely technical; they affect every aspect of your development and operational efficiency. So whether you manage a team of developers or oversee the infrastructure of a large organization, understanding the nuances of these decisions, especially self-hosting versus self-managing, is crucial to planning your strategy.

In December 2022, we discontinued our self-managed offering. After three years of trying to make it work, we realized that CDE platforms benefit from not being self-managed. While they can be self-hosted, self-management makes them less effective and more costly. In this article I’ll explain why self-managed CDEs do not offer the experience we wanted to provide to our customers, and what an alternative can look like.

These are hard-won lessons. Giving up on our self-managed offering meant forfeiting seven figures of net-new revenue and eventually led to us laying off 28% of Gitpodders at the beginning of 2023. None of these were easy decisions. The alternative we created with Gitpod Dedicated, which is still self-hosted but not self-managed, is so much better, and we have the customers to prove it.

CDEs are mission critical

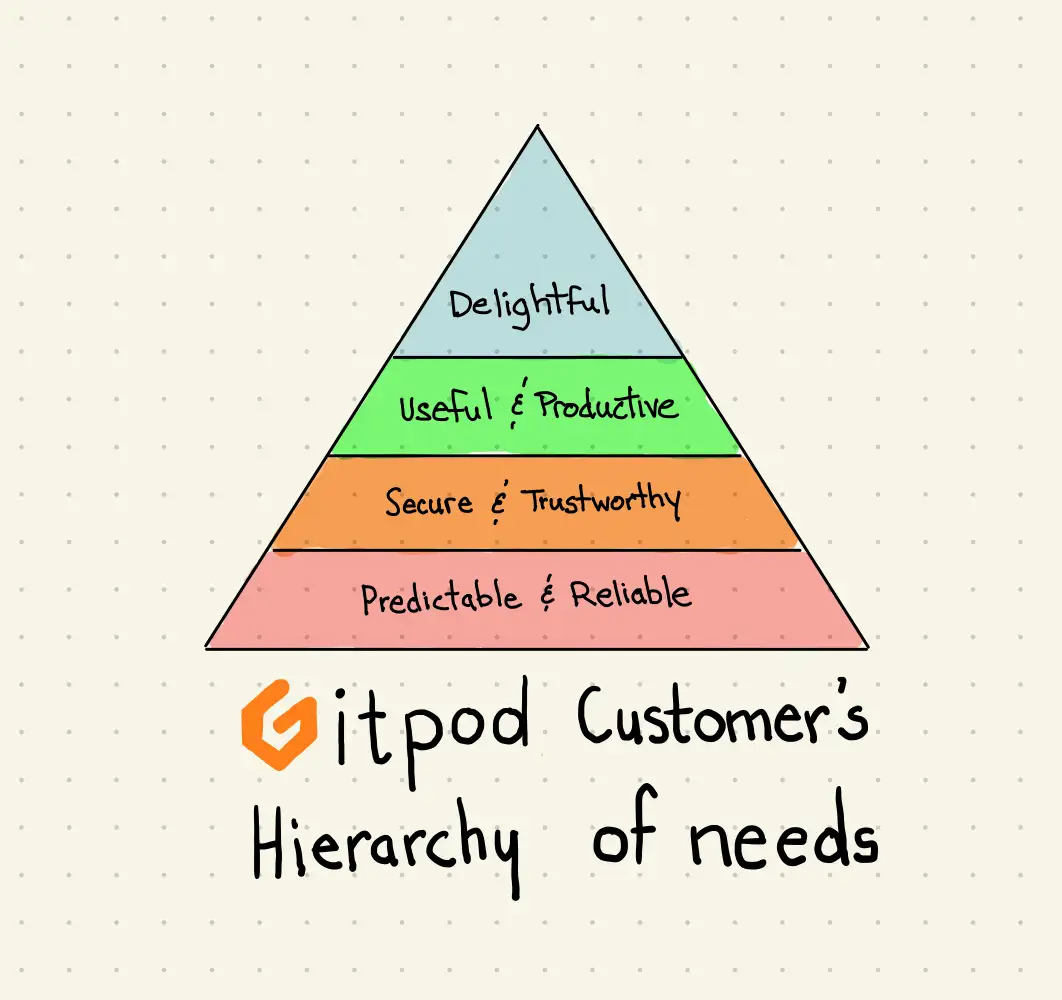

Cloud Development Environments are mission-critical software. They are where developers write software, review their work and use the tooling they need to be ready to code. When your CDE service is down, your engineers cannot work, and every minute of downtime is expensive and frustrating. Reliability is therefore fundamental to any CDE service. Organizations adopting CDEs understand this basic need well, and it often drives their desire to self-manage their CDE installations.
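To put a rough number on why downtime is so expensive, the sketch below converts an availability target into a monthly downtime budget and estimates the engineer-hours lost if that budget is spent while every developer is blocked. The 30-day month and the 200-engineer headcount are hypothetical inputs, not figures from this article.

```python
# Back-of-the-envelope downtime arithmetic; all inputs are hypothetical.
MINUTES_PER_MONTH = 30 * 24 * 60  # assumes a 30-day month: 43,200 minutes


def downtime_budget_minutes(availability: float, period_minutes: int = MINUTES_PER_MONTH) -> float:
    """Minutes of downtime an availability target allows over one period."""
    return (1.0 - availability) * period_minutes


def engineer_hours_lost(outage_minutes: float, engineers_blocked: int) -> float:
    """Engineer-hours lost if every engineer is blocked for the whole outage."""
    return outage_minutes * engineers_blocked / 60.0


if __name__ == "__main__":
    for target in (0.99, 0.999, 0.9999):
        budget = downtime_budget_minutes(target)
        hours = engineer_hours_lost(budget, engineers_blocked=200)  # hypothetical team size
        print(f"{target:.2%} availability: {budget:.1f} min/month, {hours:.0f} engineer-hours")
```

At 99.9% availability, the budget is about 43 minutes a month. With 200 engineers blocked, that comes to roughly 144 engineer-hours.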

Tight vertical integration is crucial to delivering an experience that adheres to the CDE principles (Equitable, On-Demand, Extensible, Consistent) while meeting basic needs such as reliability and security. Full control over the technology stack that powers a cloud development environment is therefore a prerequisite. Any flaw in integrating the various components of a CDE can undermine reliability and security. Consider workspace content: there’s little more excruciating than losing your work after hours of intense concentration. A CDE must ensure that your changes are not lost to state-handling bugs or node failures, which requires significant influence over the underlying infrastructure.

CDEs aren’t merely better VDIs or VMs moved to the cloud. They represent a foundational shift in how we develop software, how we collaborate and what we expect from our development environments. We want to build the best experience possible rather than settle for the lowest common infrastructure denominator.

Until 2023, Gitpod offered a product inaccurately labeled “Gitpod Self-Hosted”. At the time, we didn’t understand the difference between self-hosted and self-managed. Self-hosted means that the service runs on your infrastructure, such as your own AWS account, which helps with network integration and compliance. Self-managed, in contrast, means taking full operational responsibility for the service. The distinction has become increasingly relevant with the growing popularity of Software as a Service (SaaS) offerings.

Self-managing CDEs seems intuitive, as it aligns with decades of engineering ‘best practice’. Engineers are accustomed to maintaining their own development environments and adjacent services, like Continuous Integration. However, running a service close to home differs significantly from managing it yourself. Managing a service, i.e. assuming responsibility for it, means overseeing every aspect: components, data storage, computing resources and network setup. When the service goes down, the burden falls on the operator, who gets called out of bed. This level of control places demands on CDEs that can conflict with the vertical integration required to provide a great user experience.

Installing self-managed CDEs is hard

Any CDE provider integrates a number of different services and functions. Consider storage: whenever a workspace stops, its content needs to be backed up, often to object storage like S3. Chances are your organization already runs its own storage service, maybe MinIO. Wouldn’t it make sense for the CDE service you want to operate to reuse that existing MinIO installation? This preference extends to other CDE components. Operate yet another database? No thank you, we already have one over here. Docker registry? Our security team says we have to use Artifactory. Kubernetes? That would be GKE on 1.25, thank you very much. For the team responsible for making sure the most foundational need, reliability, is met, relying on existing infrastructure is only sensible. But it also imposes a complex set of requirements on whichever CDE you choose: it has to support all these existing technologies and systems.
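The storage example can be made concrete. Below is a minimal sketch that assumes a CDE hides object storage behind a small interface, so that S3, an existing MinIO installation or another S3-compatible backend could be plugged in. `BlobStore`, `backup_on_stop` and the object-key layout are hypothetical names used for illustration, not Gitpod’s actual API. An in-memory store stands in for a real backend.

```python
import io
import tarfile
import tempfile
from pathlib import Path
from typing import Dict, Protocol


class BlobStore(Protocol):
    """Hypothetical minimal contract a CDE could require from object storage."""

    def put(self, key: str, data: bytes) -> None: ...

    def get(self, key: str) -> bytes: ...


class InMemoryStore:
    """Stand-in backend; an S3 or MinIO adapter would implement the same methods."""

    def __init__(self) -> None:
        self.objects: Dict[str, bytes] = {}

    def put(self, key: str, data: bytes) -> None:
        self.objects[key] = data

    def get(self, key: str) -> bytes:
        return self.objects[key]


def backup_on_stop(workspace_id: str, content_dir: Path, store: BlobStore) -> str:
    """Archive a stopped workspace's content as tar.gz and upload it.

    Returns the object key so that a later start can restore from it.
    """
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:gz") as tar:
        tar.add(str(content_dir), arcname=".")
    key = f"workspaces/{workspace_id}/backup.tar.gz"
    store.put(key, buf.getvalue())
    return key


if __name__ == "__main__":
    store = InMemoryStore()
    with tempfile.TemporaryDirectory() as tmp:
        (Path(tmp) / "notes.txt").write_text("unsaved thoughts")
        key = backup_on_stop("ws-123", Path(tmp), store)
    print(key, len(store.get(key)), "bytes")
```

Every backend a customer wants to reuse has to implement this contract correctly and behave consistently under load. That is the requirement surface the paragraph above describes, multiplied across databases, registries and clusters.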

In larger organizations, these existing infrastructure systems are likely managed by teams of their own: the Kubernetes team, the networking team, the S3 team and the database team. Coordinating with all of them to self-manage a CDE platform can be complex. You’ll need to make sure that what those teams offer aligns with the requirements and capabilities of the CDE software you intend to run. If it doesn’t, you’ll need time from those teams to close the gap. We have seen this process take weeks, sometimes months. In the real world, self-managing a CDE can be a complex and time-consuming exercise in organizational navigation.

Kubernetes is the hardest part of this technology stack. Few organizations have a team that truly understands Kubernetes in depth; most, sensibly, focus on operational concerns and methodology. For CDEs that use Kubernetes, like Gitpod, deep integration is essential to deliver the expected security and performance. Case in point: dynamic resource allocation. Gitpod has supported resource overbooking for many years, but only recently has this capability found its way into Kubernetes. To make this happen, we had to break the abstractions Kubernetes provides and interface with the container runtime and Linux cgroups directly. The same is true for security boundaries, network bandwidth control, IOPS and storage quotas. Such deep vertical integration imposes specific requirements on the container runtime (e.g. containerd ≥ 1.6), the Linux kernel version or the filesystem. These requirements are unusual for typical Kubernetes-focused teams, but not for CDEs.
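The bookkeeping behind resource overbooking can be sketched in a few lines. This is a hypothetical illustration, not Gitpod’s implementation: the `Workspace` fields, the admission rule and the 3x overbooking ceiling are assumptions. The one real interface detail is the cgroup v2 `cpu.max` file format, which holds a quota and a period in microseconds. A node agent could write this value for each workspace cgroup to cap its burst.

```python
from dataclasses import dataclass
from typing import List

CPU_PERIOD_US = 100_000  # cgroup v2 default CPU period: 100 ms


@dataclass
class Workspace:
    name: str
    request_cpus: float  # capacity guaranteed to the workspace under contention
    limit_cpus: float    # ceiling it may burst to while the node has spare capacity


def cpu_max(limit_cpus: float, period_us: int = CPU_PERIOD_US) -> str:
    """Contents for a cgroup v2 `cpu.max` file: '<quota_us> <period_us>'."""
    return f"{round(limit_cpus * period_us)} {period_us}"


def overbooking_ratio(workspaces: List[Workspace], node_cpus: float) -> float:
    """Sum of burst limits over physical CPUs; above 1.0 the node is overbooked."""
    return sum(w.limit_cpus for w in workspaces) / node_cpus


def admit(running: List[Workspace], candidate: Workspace,
          node_cpus: float, max_ratio: float = 3.0) -> bool:
    """Admit a workspace only if all guaranteed requests still fit on the node
    and total burst limits stay under a (hypothetical) overbooking ceiling."""
    planned = running + [candidate]
    requests_fit = sum(w.request_cpus for w in planned) <= node_cpus
    return requests_fit and overbooking_ratio(planned, node_cpus) <= max_ratio
```

For example, `cpu_max(2)` yields `"200000 100000"`, i.e. two CPUs’ worth of time per 100 ms period. Adjusting values like these per workspace as load changes is the kind of work that, as described above, meant going below Kubernetes’ abstractions.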

Consuming Kubernetes software has become easier, thanks to growing skill and knowledge among teams and UX improvements within the community. Even so, managing complex Kubernetes software remains a significant challenge. Entire companies have been built to try to solve this problem.

Frankensteining software

The inherent need to adapt CDEs to pre-existing infrastructure, combined with an innate desire to integrate them into an existing ecosystem, often results in a situation we’ve come to call “frankensteining”. For CDE vendors, accommodating the many different ways of integrating with various infrastructures is a challenging task. For CDE customers, the desire for deeper integration with the CDE platform frequently outpaces what the platform currently supports. This is especially true for customers who manage and own the software. Naturally, additional authentication mechanisms built on internal APIs get added, and new custom features are built on assumptions that break with the next update. What started as a standard CDE installation becomes a Frankenstein implementation that is hard to update, difficult to operate and near impossible to support.

Operating self-managed CDEs is bad for your sleep

Now, you’ve managed to piece together all the required bits of infrastructure. You’ve gone through the installation and are finally up and running. Developers in your organization love their CDEs. There are no more “works on my machine” issues, onboarding time and context-switching costs are virtually non-existent, and you’ve earned brownie points with your security team because endpoint security is less of a concern. In short, the CDE platform you now operate is becoming mission-critical.

As with any mission-critical software, maintaining operational excellence is crucial. Observability is the cornerstone of your ability to guarantee the most foundational need: reliability. As with the other infrastructure building blocks, you probably already have an observability stack in place; if not, you’ll need one now. CDEs sport a myriad of failure modes due to their dynamism (starting and stopping workloads several hundred times a day) and variance (developers run a wide range of applications with different load profiles). Once you’ve integrated the multitude of metrics, imported or built your own dashboards, understood and set up the alerts, and hooked it all up to your PagerDuty installation, you can finally sleep well again, until PagerDuty ends your slumber, of course.
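As an example of one alert in such a setup, here is a minimal sketch that pages when too many workspace starts fail within a sliding window. The window length, the 5% threshold and the minimum sample size are hypothetical tuning values. In practice, this logic would usually live in your monitoring system’s alert rules rather than in application code.

```python
from collections import deque
from typing import Deque, Tuple


class StartFailureAlert:
    """Page when the share of failed workspace starts in a sliding time window
    exceeds a threshold (window, threshold and minimum sample are hypothetical)."""

    def __init__(self, window_s: float = 600, threshold: float = 0.05,
                 min_starts: int = 20) -> None:
        self.window_s = window_s
        self.threshold = threshold
        self.min_starts = min_starts
        self.events: Deque[Tuple[float, bool]] = deque()  # (timestamp, succeeded)

    def record(self, ts: float, succeeded: bool) -> None:
        """Record one workspace start outcome; timestamps must be non-decreasing."""
        self.events.append((ts, succeeded))
        # Drop outcomes that have fallen out of the window.
        while self.events and self.events[0][0] < ts - self.window_s:
            self.events.popleft()

    def should_page(self) -> bool:
        total = len(self.events)
        if total < self.min_starts:
            return False  # too few starts to judge; avoids paging on a one-off failure
        failures = sum(1 for _, ok in self.events if not ok)
        return failures / total > self.threshold
```

The minimum sample size matters for a CDE. With hundreds of starts a day, a single failed start during a quiet hour should not wake anyone up.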

Every month or so, a new update becomes available. Because CDEs are mission-critical, updates must cause no downtime, expected or unexpected. Kubernetes simplifies this process but isn’t a complete solution. Successful updates require cooperation between your team and the CDE vendor to ensure that tools and methods, like blue/green deployments or canary releases, are in place for smooth transitions. Keeping all workspaces running, preserving ongoing work, and keeping integrations like SCM, SSO, Docker registries and package repositories functional can be stressful. Better do this on a Sunday morning.
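Blue/green deployment for a CDE has a twist: the “blue” side cannot simply be switched off, because it hosts developers’ in-progress work. The sketch below uses hypothetical `Cluster` and `BlueGreenRollout` types. It routes new workspaces to the new version and allows the old one to be retired only once every workspace on it has been stopped by its owner. It models the routing rule alone, not actual traffic switching or any vendor’s prescribed update procedure.

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class Cluster:
    version: str
    running: Set[str] = field(default_factory=set)  # IDs of running workspaces


class BlueGreenRollout:
    """Hypothetical routing rule for a workspace-aware blue/green update:
    new workspaces start on green, existing ones stay on blue until stopped."""

    def __init__(self, blue: Cluster, green: Cluster) -> None:
        self.blue = blue
        self.green = green
        # Remember where each workspace lives so stops go to the right cluster.
        self.location: Dict[str, Cluster] = {ws: blue for ws in blue.running}

    def start_workspace(self, ws_id: str) -> str:
        """Place a newly started workspace on the new version; return that version."""
        self.green.running.add(ws_id)
        self.location[ws_id] = self.green
        return self.green.version

    def stop_workspace(self, ws_id: str) -> None:
        """Stop a workspace wherever it runs (a real system would back it up first)."""
        cluster = self.location.pop(ws_id)
        cluster.running.discard(ws_id)

    def blue_can_be_retired(self) -> bool:
        """Only tear down the old version once no in-progress work remains on it."""
        return not self.blue.running
```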

Frankensteined installations turn updates into a gamble. Their custom configurations deviate so far from the standard setups that CDE vendors test that the vendor’s testing offers little assurance. The uncertainty over whether your customizations will still work after an update adds to the challenge.

The operational risk and effort involved incentivize infrequent updates of your CDE installation. Consequently, it is hard to stay up to date with security fixes and to offer the latest features your developers crave. With longer update cycles, CDE vendors also receive less feedback, which makes it harder for them to provide the best possible experience. This creates a lose-lose situation.

Self-Hosted, but not self-managed

There’s a middle way that balances customers’ desire for control and integration with the advantage of not having to manage everything themselves. It rests on the distinction between self-hosting and self-managing.

In November 2022, during an offsite in beautiful Sonoma County, we decided to end our self-managed offering, previously mislabeled as “self-hosted”, in favor of what we now call Gitpod Dedicated. This was not an easy decision, and it had very direct and tangible consequences. However, years of providing a self-managed CDE offering had convinced us that there must be a better way: self-hosted, but vendor-managed.

“Vendor-managed” solutions have become a standard way to consume software, most commonly referred to as Software as a Service (SaaS). SaaS offers several compelling benefits:

It eliminates the need for you to operate the software, as you can purchase a Service Level Agreement (SLA) directly from the vendor, freeing your resources and ensuring someone else is called out of bed when things go sideways.

It requires minimal upfront investment; you simply sign up and start using the product.

It removes the burden of updating the software, as you automatically receive updates and new features.

The quality and features of the software improve rapidly, as vendors can execute quicker feedback loops and product development cycles in a SaaS model.

More and more companies aim to bring these benefits to a self-hosted model. Database vendors like PlanetScale, data platforms like Databricks and Snowflake, and other developer tooling companies have pioneered this approach. For Cloud Development Environments, this model is nearly ideal, offering a practical balance between control and convenience.

Self-Hosted, vendor-managed CDEs: Gitpod Dedicated

Let’s look at the Gitpod Dedicated architecture to understand what a self-hosted, vendor-managed CDE can look like. First, we need to distinguish between infrastructure and application.

Infrastructure management

All infrastructure is installed in under 20 minutes using a vendor-supplied CloudFormation template. This template orchestrates the creation of resources such as a VPC, subnets, a load balancer, EC2 auto-scaling groups, an RDS database and, crucially, all IAM roles and permissions.

The customer applies and updates this CloudFormation template, ensuring they retain complete control over their infrastructure.

Application management

The Gitpod application, operating on this infrastructure, is installed and updated through a set of Lambdas deployed as part of the overall infrastructure.

These Lambdas connect to a control plane responsible for orchestrating updates and handling operational aspects like telemetry, alerting and log introspection.
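Here is a minimal sketch of one reconciliation pass. It assumes a pull model in which the in-account function calls out to the vendor control plane, applies the desired version locally and reports the outcome as telemetry. The callables and status fields are hypothetical stand-ins; the article does not describe the actual protocol between the Lambdas and the control plane.

```python
from typing import Callable, Dict


def reconcile(installed_version: str,
              fetch_desired_version: Callable[[], str],
              apply_update: Callable[[str], None],
              report_status: Callable[[Dict[str, str]], None]) -> str:
    """One pass of a hypothetical update loop run inside the customer account.

    Pulls the desired version from the vendor control plane (an outbound call),
    applies it locally if it differs, and reports the result back as telemetry.
    Returns the version that is running afterwards.
    """
    desired = fetch_desired_version()
    if desired == installed_version:
        report_status({"version": installed_version, "state": "up-to-date"})
        return installed_version
    try:
        apply_update(desired)
    except Exception as exc:  # keep the old version running and tell the vendor
        report_status({"version": installed_version, "state": "update-failed",
                       "error": str(exc)})
        return installed_version
    report_status({"version": desired, "state": "updated"})
    return desired
```

Under this assumption, one appeal of pulling is that the control plane never needs inbound network access into the customer’s account.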

A pivotal aspect of this model is the meticulous separation of permissions, allowing customers to maintain authority over their infrastructure while offloading the operational burden of the software.
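One way to make that separation checkable is to scan the policy attached to the vendor-operated role for infrastructure actions the customer reserves for itself. The sketch below is a simplified, hypothetical check over an IAM-style policy document. The reserved action list is an assumption. The check also ignores `Deny`, `NotAction`, conditions and resource scoping, all of which a real review would need to consider.

```python
from fnmatch import fnmatchcase
from typing import Any, Dict, List, Sequence

# Hypothetical: infrastructure actions reserved for the customer's own roles.
CUSTOMER_ONLY = ("cloudformation:*", "iam:*", "ec2:CreateVpc", "ec2:DeleteVpc",
                 "rds:DeleteDBInstance")


def allowed_actions(policy: Dict[str, Any]) -> List[str]:
    """Collect actions from the policy's Allow statements."""
    statements = policy.get("Statement", [])
    if isinstance(statements, dict):  # a single statement may be given as an object
        statements = [statements]
    actions: List[str] = []
    for stmt in statements:
        if stmt.get("Effect") != "Allow":
            continue
        granted = stmt.get("Action", [])
        actions.extend([granted] if isinstance(granted, str) else granted)
    return actions


def violations(policy: Dict[str, Any], reserved: Sequence[str] = CUSTOMER_ONLY) -> List[str]:
    """Return granted actions that overlap with a reserved action.

    IAM action names are case-insensitive, so both sides are lowercased. A grant
    is flagged if it covers a reserved action ("ec2:*" covers "ec2:CreateVpc") or
    if a reserved wildcard covers it ("iam:*" covers "iam:PassRole").
    """
    found: List[str] = []
    for action in allowed_actions(policy):
        a = action.lower()
        for pattern in reserved:
            p = pattern.lower()
            if fnmatchcase(p, a) or fnmatchcase(a, p):
                found.append(action)
                break
    return found
```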

Early Adoption

Gitpod Dedicated was developed in close collaboration with early adopter customers to ensure its architecture would meet the stringent security and architecture requirements of diverse companies. It has since undergone, and passed, the most stringent security reviews:

Forward-looking industry leaders like Dynatrace and Quizlet have since adopted Gitpod Dedicated, praising its ease of installation and low operational overhead.

Large financial institutions have adopted CDEs thanks to the control afforded by the Dedicated architecture.

Several customers have migrated from our previous self-managed offering to Gitpod Dedicated. All reported reduced operational effort and some saw up to 93% faster workspace startup times.

Time and again, we have seen how simple vendor-managed CDEs are to install. Across the many Gitpod Dedicated installations, it has become clear that reducing variance in how a product is installed makes the product more reliable and easier to use.

We’re always looking to learn more about how to make the experience of using CDEs optimal while meeting any and all security and architecture requirements that organizations have.

You can try Gitpod Dedicated today; let us know what your experience was like.