Let’s build a cloud development environment

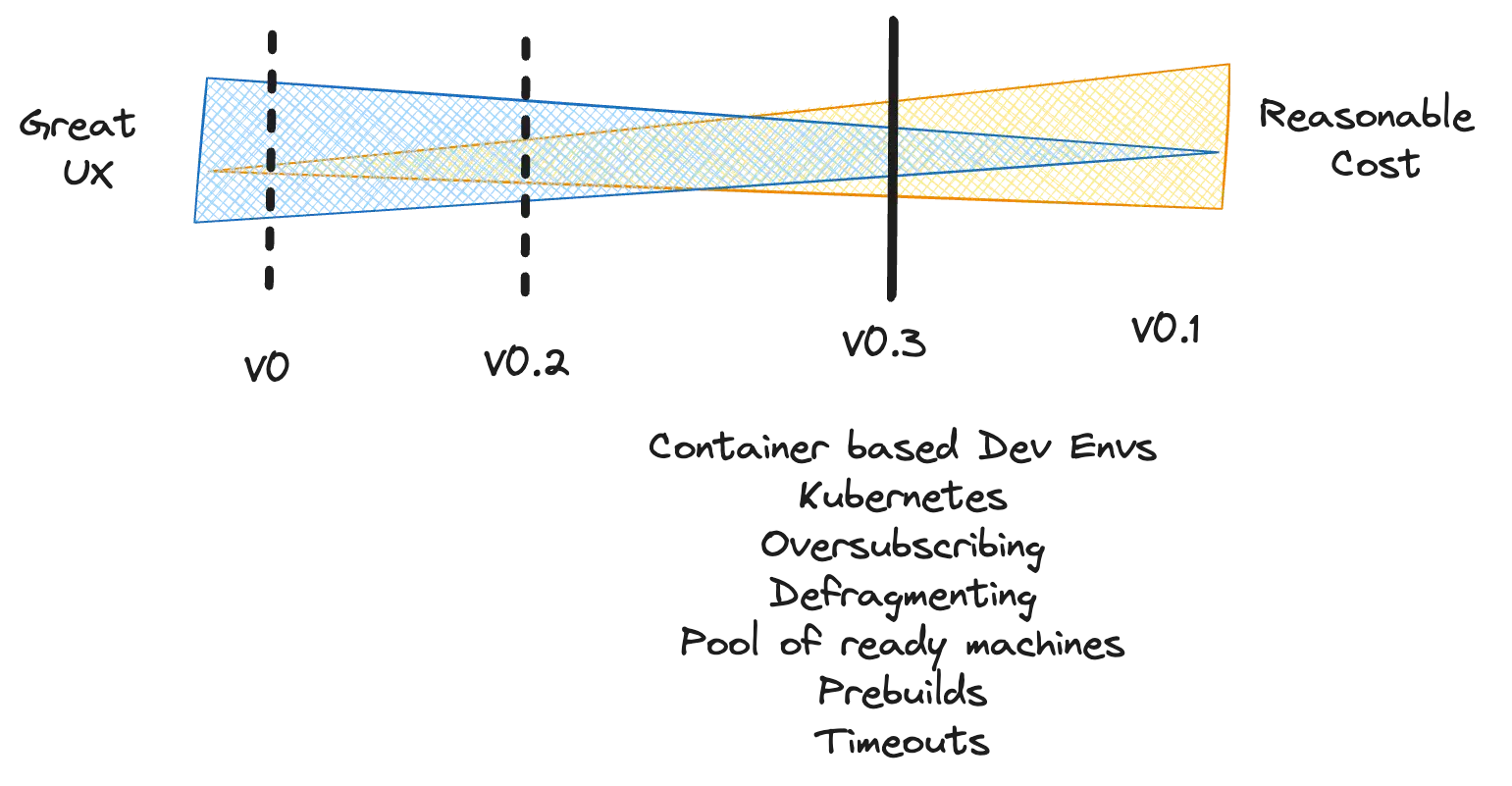

Cloud Development Environments (CDEs) offer significant improvements to developer experience and productivity. They also improve security by centralizing source code access. CDEs sit close to a company's IP and core operations, so for some companies and industries it is sensible to consider building them in-house and running them within the company network. But how do you get started, and what challenges are you likely to face? This blog post takes you on a mental journey from a simple solution to something that works in practice and strikes the right balance between cost and user experience.

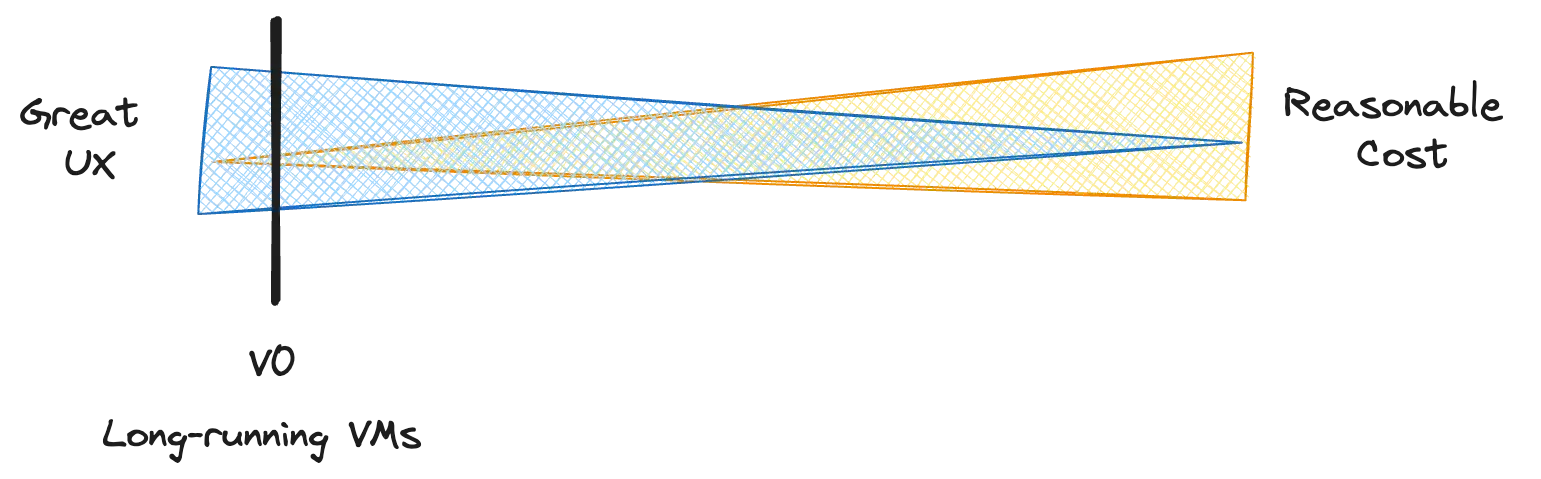

V0: The starting point: always-on VM-based CDEs

It makes sense to start simple; after all, KISS is a well-known engineering principle for a reason. Keeping it simple lets you assess the benefits of CDEs for your company without committing to a months-long implementation effort. The KISS version of a CDE gives each developer a long-running VM in the cloud (e.g. EC2), runs openvscode-server on that VM for remote development, and uses a devcontainer.json file to describe and standardize the development environment. Running the environment in a container on a VM has another benefit: your development environment more closely resembles your production environment. This setup already delivers some of the core benefits of CDEs, namely equitable and consistent development environments. Notably, this architecture is how companies like Stripe, Slack and Uber got started on their journeys to building CDE solutions.
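To make the devcontainer.json part concrete, here is a minimal sketch of a script that a platform team might use to write a shared baseline configuration into a repository. The keys (`name`, `image`, `forwardPorts`, `postCreateCommand`, `customizations.vscode.extensions`) are standard devcontainer.json properties. The environment name, port, `make bootstrap` command and extension list are hypothetical placeholders. The helpers `render_devcontainer` and `write_devcontainer` are illustrative and not part of any tool.

```python
import json
from pathlib import Path

# Hypothetical company-wide baseline; all values are placeholders.
DEVCONTAINER = {
    "name": "company-default",
    # Generic Dev Containers base image; pin a tag or digest in practice.
    "image": "mcr.microsoft.com/devcontainers/base:ubuntu",
    # Ports the editor should forward from the container.
    "forwardPorts": [3000],
    # Runs once after the container is created (hypothetical make target).
    "postCreateCommand": "make bootstrap",
    # Editor extensions every developer gets.
    "customizations": {"vscode": {"extensions": ["ms-python.python"]}},
}


def render_devcontainer(config):
    """Serialize a config dict as devcontainer.json text."""
    return json.dumps(config, indent=2) + "\n"


def write_devcontainer(repo_root, config):
    """Write .devcontainer/devcontainer.json into a repository checkout."""
    target = Path(repo_root) / ".devcontainer" / "devcontainer.json"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(render_devcontainer(config))
    return target
```

Generating the file from one source of truth is just one way to keep repositories consistent. Committing a hand-written devcontainer.json to each repository works equally well.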

Side note: successfully rolling out developer tools is not trivial. This blog post outlines proven strategies for successful developer tool adoption.

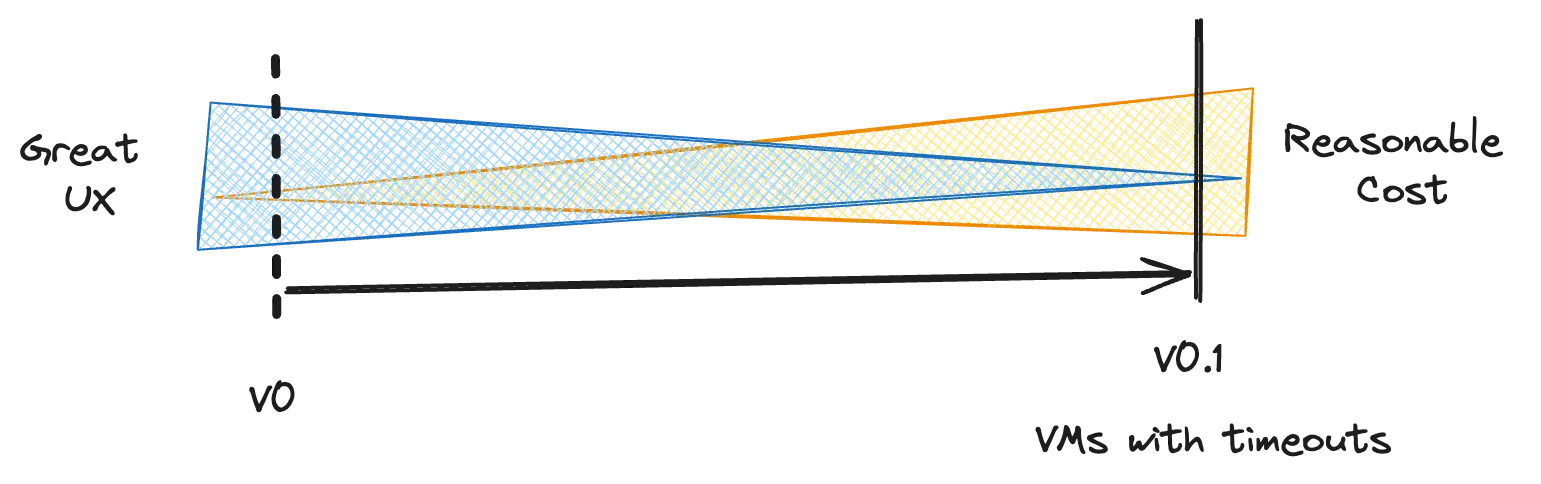

V0.1: Automatic shutdown

Always-on VMs are good for user experience, because engineers can jump in and out of existing development environments with almost no wait time. However, this architecture can become very costly, very quickly. For example, a comparatively small VM with 8 cores, 16 GB of RAM and a fast disk (useful because many dev workloads are very I/O heavy) can cost around $300 per month at on-demand pricing. For 100 engineers, that adds up to $360,000 per year.

Once your CDE solution sees some adoption, it’s sensible to look for ways to reduce cost. The obvious first step is to shut down VMs when they are not in use. To do this without losing an engineer’s work, you need a reliable way to persist data from a development environment so that engineers can pick up where they left off. That means persisting not only the working directories where source code is stored, but ideally also shell history, environment variables, runtime state and more. Without these, developers need to reconfigure their setup every time they jump into a development environment.

There are several storage options. They range from the VM's own instance storage to networked storage (such as EBS or S3 on AWS) that exists independently of any single VM, which decouples the VM from the development environment state. This decoupling increases flexibility, because you can attach the state of one VM to another. For example, you can attach the state of a “paused” development environment to a pooled or “warm” VM, thereby reducing startup times (see below).
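The persistence step can be sketched as packing the relevant paths into an archive before a VM stops and unpacking it on whichever VM picks the environment up next. This is a minimal sketch under two assumptions. First, the list of persisted paths (`PERSISTED_PATHS`) and the `snapshot`/`restore` helpers are hypothetical. Second, the archive is kept as a local file here, whereas in practice it would live on networked storage such as EBS or S3. Only file-based state is captured, so running processes (runtime state) are out of scope for this approach.

```python
import tarfile
import tempfile
from pathlib import Path

# Hypothetical paths (relative to the user's home) that make up the
# persisted "state" of a development environment; extend as needed.
PERSISTED_PATHS = ["workspace", ".bash_history", ".config"]


def snapshot(home, archive):
    """Pack the persisted paths into a compressed tarball before the VM stops."""
    with tarfile.open(archive, "w:gz") as tar:
        for rel in PERSISTED_PATHS:
            src = Path(home) / rel
            if src.exists():
                tar.add(src, arcname=rel)  # directories are added recursively


def restore(archive, home):
    """Unpack a snapshot into the home directory of a (possibly different) VM."""
    Path(home).mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, "r:gz") as tar:
        # The "data" filter (Python 3.12+ and recent security releases) rejects
        # absolute paths and links that would escape the target directory.
        if hasattr(tarfile, "data_filter"):
            tar.extractall(home, filter="data")
        else:
            tar.extractall(home)


# Usage: round-trip a fake home directory through a snapshot.
with tempfile.TemporaryDirectory() as tmp:
    old_home, new_home = Path(tmp, "old"), Path(tmp, "new")
    (old_home / "workspace").mkdir(parents=True)
    (old_home / "workspace" / "main.py").write_text("print('hi')\n")
    (old_home / ".bash_history").write_text("make test\n")
    snapshot(old_home, Path(tmp, "env.tar.gz"))
    restore(Path(tmp, "env.tar.gz"), new_home)
    restored = sorted(p.relative_to(new_home).as_posix() for p in new_home.rglob("*"))
```

Because the archive is independent of the VM, the restore step can run on a freshly booted or pooled machine.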

Another complex aspect is the shutdown, or timeout, logic. When do you shut down a development environment? How do you know whether an engineer is ‘done’ with their environment or just working late? Approaches range from shutdowns at a fixed time of day, to user-defined timeouts set at each startup, to automatic inactivity-triggered timeouts. The right choice depends on the context; however, the last of these is the most scalable and user-friendly.
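An inactivity-triggered timeout boils down to a periodic check that compares each environment's last activity with its timeout. The sketch below assumes that something (editor, terminal or SSH activity) updates a `last_activity` timestamp. The `Environment` type, the 30-minute default and `environments_to_stop` are hypothetical. A per-environment `timeout` field also covers the user-defined variant.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Environment:
    env_id: str
    # Hypothetical signal: last editor keystroke, terminal input or SSH session.
    last_activity: datetime
    # Hypothetical default; users could override it per environment.
    timeout: timedelta = timedelta(minutes=30)


def environments_to_stop(envs, now):
    """Return ids of environments that have been idle longer than their timeout."""
    return [e.env_id for e in envs if now - e.last_activity > e.timeout]


# Usage: a reconciler loop would call this every minute or so.
now = datetime(2024, 5, 6, 18, 0)
envs = [
    Environment("alice", last_activity=now - timedelta(minutes=5)),
    Environment("bob", last_activity=now - timedelta(minutes=45)),
    Environment("carol", last_activity=now - timedelta(minutes=45),
                timeout=timedelta(hours=2)),
]
print(environments_to_stop(envs, now))  # ['bob']
```

Stopping an environment would then trigger the snapshot of its state before the VM is shut down.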

Implementing automatic shutdown of development environments can lead to considerable savings. Assume a development environment only runs for 10 hours each weekday. In that case, the above-mentioned cost of $360,000 per year would be reduced to ~$104,800 (50 hours per week * 52 weeks * $0.4032 per hour * 100 engineers).
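The arithmetic behind these figures fits in a small helper. It uses the $0.4032 hourly rate from the text; `annual_cost` is just an illustrative name. The always-on result comes out slightly below $360,000, because "around $300 per month" is a rounded figure (0.4032 * 730 hours is about $294).

```python
HOURLY_RATE = 0.4032  # on-demand $/hour for the 8-core, 16 GB VM (figure from the text)
ENGINEERS = 100


def annual_cost(hours_per_week, weeks=52, rate=HOURLY_RATE, vms=ENGINEERS):
    """Yearly infrastructure cost for `vms` VMs running `hours_per_week` each."""
    return hours_per_week * weeks * rate * vms


always_on = annual_cost(hours_per_week=24 * 7)
office_hours = annual_cost(hours_per_week=10 * 5)
print(f"${always_on:,.0f}")     # $352,236
print(f"${office_hours:,.0f}")  # $104,832
```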

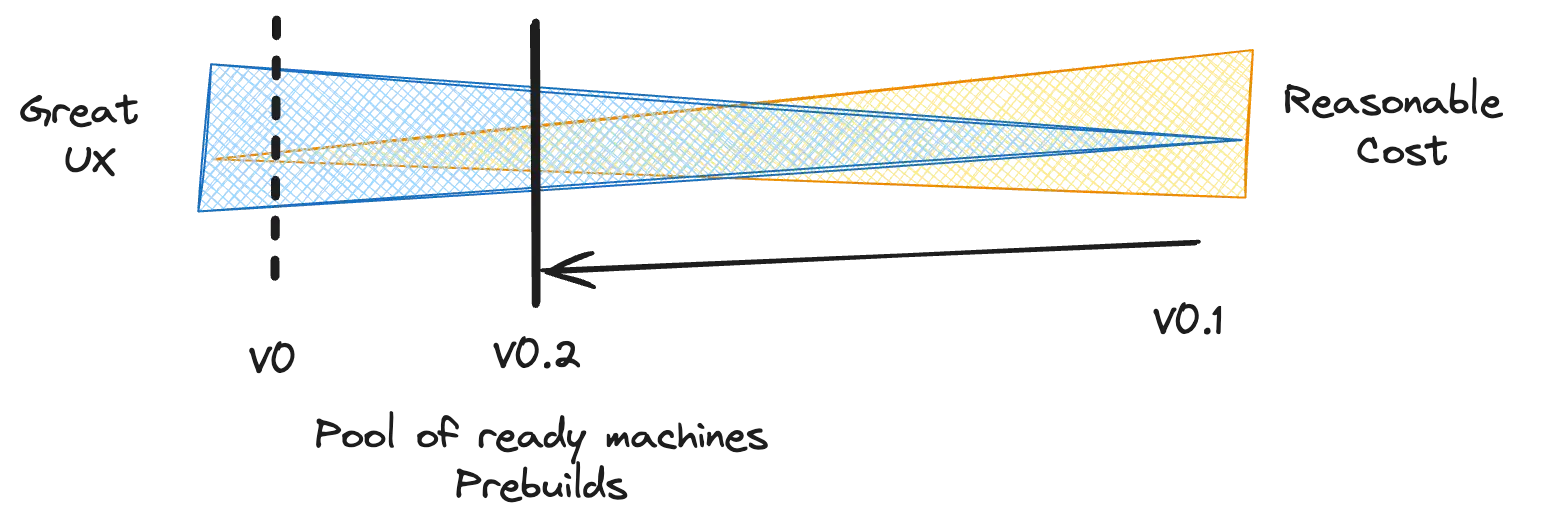

V0.2: Improving workspace startup times

This significant cost reduction must be balanced against its impact on user experience. After implementing VM shutdowns, engineers have to wait for an entire VM to spin up whenever they want to write a line of code. This happens at the start of each workday, after timeouts, and when launching additional environments for tasks like PR reviews or testing new features. VM startup often takes around 2 minutes. Once the VM itself is running, it still needs to be set up for development: the devcontainer needs to be started and configured, a backup potentially downloaded and unpacked, source code checked out, development tools started, code built and more. If 100 engineers each start their development environment twice a day, and each startup takes 5 minutes, the total daily time lost is 1,000 minutes. That is over 16 hours, or more than two full 8-hour workdays. At an annual salary of $120,000 per engineer, this inefficiency costs around $240,000 a year, which is about the same as the amount saved by spinning down VMs above! This highlights that improving CDE startup times can save as much as, if not more than, reducing infrastructure cost. Beyond the savings from increased productivity, faster startup times are sure to delight users and keep them from context switching while they wait.
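The productivity estimate can be reproduced as follows. The text does not say how the hourly wage or the number of working days was derived. This sketch assumes 2,080 paid hours per year (52 weeks of 40 hours) to turn the salary into an hourly wage, and about 250 working days per year. Both are labeled assumptions in the code, and `startup_cost_per_year` is an illustrative name.

```python
def startup_cost_per_year(engineers=100, startups_per_day=2, minutes_per_startup=5,
                          salary=120_000,
                          hours_per_year=2080,  # assumption: 52 weeks * 40 hours
                          workdays=250):        # assumption: working days per year
    """Yearly cost of engineer time spent waiting for environments to start."""
    minutes_lost_per_day = engineers * startups_per_day * minutes_per_startup
    hourly_wage = salary / hours_per_year
    return minutes_lost_per_day / 60 * hourly_wage * workdays


print(f"${startup_cost_per_year():,.0f}")  # $240,385
```

Halving `minutes_per_startup` halves the result, which is a quick way to estimate what a given startup-time improvement is worth.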

Development environment startup times can be reduced in many ways. A sensible first step is to keep a pool of VMs ready for spinning up development environments. When an engineer requests an environment, they receive a VM from the pool and the development environment defined in the .devcontainer.json is started on it. Another VM is then spun up to keep the number of VMs in the pool constant. This requires you to build logic that manages the pool. There is a clear trade-off between user experience and cost here: a larger pool increases the chances that a machine is ready for every engineer, but it also increases the cost. Finding the right balance depends on how much you are willing to pay for shorter startup times.
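The pooling logic can be sketched as a queue of pre-booted VMs that is topped back up after every hand-out. This is a minimal, single-threaded sketch. `WarmPool` is a hypothetical class, and `launch_vm` is a hypothetical callable standing in for a real cloud API call that boots a VM and returns its id. In a real system, refilling would happen asynchronously, which is why `acquire` also has a slow path for when the pool is empty.

```python
from collections import deque
from itertools import count


class WarmPool:
    """Keeps `target_size` booted VMs ready and hands one out per request."""

    def __init__(self, target_size, launch_vm):
        self.target_size = target_size
        self.launch_vm = launch_vm  # hypothetical: boots a VM, returns its id
        self.ready = deque()
        self.refill()

    def refill(self):
        # Boot VMs until the pool is back at its target size.
        while len(self.ready) < self.target_size:
            self.ready.append(self.launch_vm())

    def acquire(self):
        # Fast path: take a pre-booted VM. Slow path: boot one on demand.
        vm = self.ready.popleft() if self.ready else self.launch_vm()
        self.refill()  # in a real system this would run in the background
        return vm


# Usage with a fake launcher that just numbers the VMs.
ids = count(1)
pool = WarmPool(target_size=3, launch_vm=lambda: f"vm-{next(ids)}")
vm = pool.acquire()  # "vm-1"; the pool is topped back up with "vm-4"
```

`target_size` is the knob for the trade-off described above: every extra slot buys a better chance of an instant start at the price of one more idle VM.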

While a pool of pre-warmed machines eliminates VM spin-up time, it doesn’t necessarily speed up the setup of the development environment itself. Moreover, the tasks run when starting a development environment are likely the same for most engineers, so the same work happens many times over. To counter this, it is reasonable to implement logic that prebuilds and preconfigures development environments on top of the pooled VMs. With this, startup times can be reduced to less than 1 minute (depending on the code base), and engineers can start developing as soon as they get their environment. Prebuilding also comes with cost versus UX considerations: you need to decide how often to prebuild so that changes on the main branch are incorporated efficiently. A balanced approach, like prebuilding on every 10th commit, could be an effective solution.
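A prebuild trigger along these lines can be sketched as a counter over commits to the main branch. `PrebuildPolicy` is a hypothetical name. The extra rule, which also prebuilds immediately when a commit changes the devcontainer configuration, is an assumption added here and not something the text prescribes. It avoids handing out prebuilt environments that no longer match their definition.

```python
class PrebuildPolicy:
    """Decide when to prebuild: every Nth commit on main, or on config changes."""

    def __init__(self, every_n_commits=10):
        self.every_n = every_n_commits
        self.commits_since_prebuild = 0

    def on_commit(self, touches_devcontainer):
        """Record one commit on main; return True if a prebuild should run."""
        self.commits_since_prebuild += 1
        # Assumption: a changed devcontainer.json always warrants a fresh prebuild.
        if touches_devcontainer or self.commits_since_prebuild >= self.every_n:
            self.commits_since_prebuild = 0
            return True
        return False


# Usage: 25 ordinary commits trigger prebuilds on commits 10 and 20.
policy = PrebuildPolicy(every_n_commits=10)
triggered = [n for n in range(1, 26) if policy.on_commit(touches_devcontainer=False)]
print(triggered)  # [10, 20]
```

Lowering `every_n_commits` keeps prebuilds fresher at the cost of more build minutes. Raising it does the opposite.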

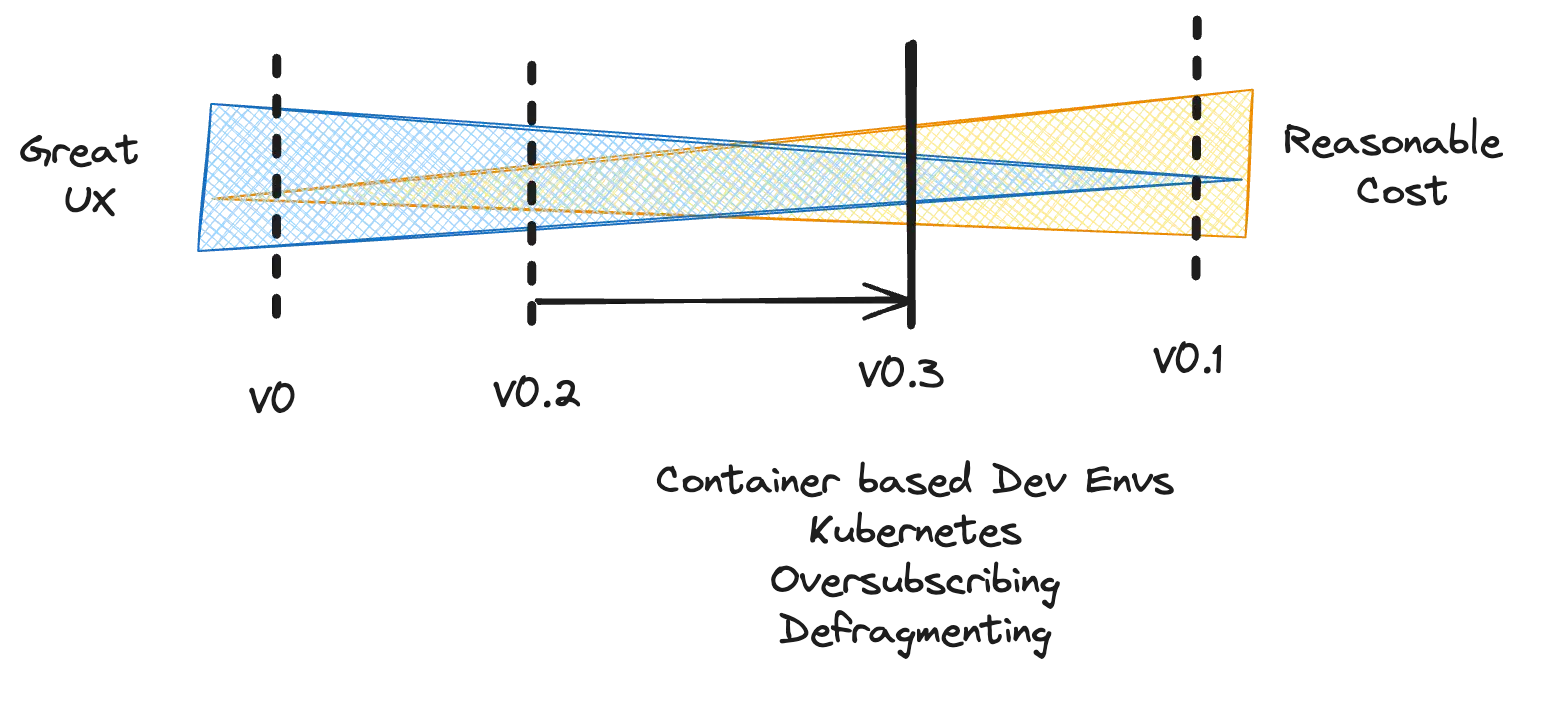

V0.3: Reducing cost even further

Unfortunately, incorporating the user experience improvements above will increase your costs again. For example, a pool of ten 8-core VMs running 10 hours per weekday costs ~$875 per month, or about $10,500 a year. This comes on top of the ~$104,800 it costs just to run the development environments during working hours. As such, it is sensible to look for further ways to reduce cost.

Engineers don’t always need 100% of the hardware resources of their development environment. Usually, all available resources are only needed when building code or running other compute-intensive tasks like data science workloads. If several development environments run on the same VM, chances are high that not every developer is using all of their environment's resources at the same time. This means we can place more development environments on a VM than would theoretically fit (a.k.a. oversubscribing or overbooking). Doing so can reduce cost significantly further, because we can run more development environments with the same amount of resources. One way to do this is to run each environment in a container and to run several containers on each VM. You can do this with Docker directly or with a container orchestration solution like Kubernetes (in which case oversubscription is achieved by setting resource requests and limits appropriately).
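Oversubscription can be illustrated with a first-fit placement that schedules against an inflated capacity. The Kubernetes scheduler places pods according to their resource requests, so setting requests below limits has a similar effect. In this sketch, an explicit `overcommit` ratio stands in for that mechanism. The `Vm` type, the `place` function and the 2x ratio are hypothetical, and only CPU is modeled.

```python
from dataclasses import dataclass, field


@dataclass
class Vm:
    cpus: float
    overcommit: float = 2.0  # hypothetical: schedule up to 2x the physical CPUs
    envs: list = field(default_factory=list)  # (env_id, cpu_request) pairs

    def requested(self):
        return sum(cpu for _, cpu in self.envs)

    def fits(self, cpu_request):
        return self.requested() + cpu_request <= self.cpus * self.overcommit


def place(vms, env_id, cpu_request, new_vm):
    """First-fit placement; boots a new VM only if no existing one has headroom."""
    for vm in vms:
        if vm.fits(cpu_request):
            vm.envs.append((env_id, cpu_request))
            return vm
    vm = new_vm()  # no headroom anywhere: take a fresh VM
    vm.envs.append((env_id, cpu_request))
    vms.append(vm)
    return vm


# Usage: six environments that each nominally need a full 8-core VM.
vms = []
for i in range(6):
    place(vms, f"env-{i}", cpu_request=8, new_vm=lambda: Vm(cpus=8))
print(len(vms))  # 3 VMs instead of 6 without oversubscription
```

The ratio encodes a bet on how rarely environments peak at the same time. If the bet is wrong, builds on a shared VM slow each other down, so a conservative ratio is a safer starting point.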

To optimize cost further, it is important to shut down VMs when they are not in use. However, when several environments run on one machine, you have to wait until the last one shuts down before you can shut down the VM, which again can cause unnecessarily high costs. The solution is to add the capability to shut down a development environment on one machine and spin it back up on another. This effectively allows running development environments to be defragmented, which increases VM utilization.
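Deciding which VMs to empty is a small packing problem. The greedy sketch below tries to drain the least-loaded VMs first by moving their environments onto the fullest VM that still has room (best fit). Only plans in which every environment finds a new home are accepted. `plan_defrag` is a hypothetical helper and "size" is an abstract resource unit, for example a CPU request. Executing a move would mean stopping the environment, persisting its state and starting it on the target VM. That step is not shown here.

```python
def plan_defrag(vms, capacity):
    """Greedy plan for emptying lightly loaded VMs so they can be shut down.

    vms: {vm_id: [(env_id, size), ...]}, where size is e.g. a CPU request.
    capacity: schedulable size per VM (after any overcommit).
    Returns {vm_id_to_stop: [(env_id, target_vm_id), ...]}.
    """
    load = {vm: sum(size for _, size in envs) for vm, envs in vms.items()}
    drained, receivers, plan = set(), set(), {}
    for vm in sorted(vms, key=lambda v: load[v]):  # emptiest VMs first
        if vm in receivers:
            continue  # never move environments twice in one plan
        trial, moves = dict(load), []
        for env_id, size in sorted(vms[vm], key=lambda e: -e[1]):  # largest first
            targets = [t for t in vms
                       if t != vm and t not in drained and trial[t] + size <= capacity]
            if not targets:
                break  # this VM cannot be emptied; discard the trial
            target = max(targets, key=lambda t: trial[t])  # best fit
            trial[target] += size
            moves.append((env_id, target))
        else:
            # Every environment found a new home: commit the trial plan.
            trial[vm] = 0
            load = trial
            drained.add(vm)
            receivers.update(t for _, t in moves)
            plan[vm] = moves
    return plan


# Usage: three half-empty 8-core VMs with a 2x overcommit (capacity 16).
cluster = {"vm-a": [("e1", 4)], "vm-b": [("e2", 4)], "vm-c": [("e3", 8)]}
plan = plan_defrag(cluster, capacity=16)
print(plan)  # {'vm-a': [('e1', 'vm-c')], 'vm-b': [('e2', 'vm-c')]}
```

Each planned move interrupts a running environment, so a real system would probably only act when the savings are large or would defer moves until an environment is idle.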

Depending on how quickly and smoothly this happens, it can impact the user experience and interrupt engineers in their flow. Again, there is a trade-off between UX and cost.

Taking stock

Let’s take stock of how far we have come: we created a system that lets developers fairly quickly spin up an on-demand development environment at a manageable cost. Along the way, we had to answer many questions about what to store, timeout logic and duration, architecture choices (VMs versus containers), oversubscription, prebuild frequency, and the size of the pool of instances kept ready. This only scratches the surface of what needs to be considered, but it gives a glimpse of how complex it is to build and operate a CDE solution in-house. Some important considerations were not covered:

Observability: CDEs can quickly become business critical, and hence a good level of observability and alerting is essential.

Updates: Updates are also critical. When a vulnerability is patched in an image you use, how do you make sure that all development environments are updated if they are running all the time? And how do you roll out the update without impacting users? Similarly, how do you update the infrastructure (e.g. a new AMI) without impacting users?

Getting to a sensible balance between cost and UX takes a lot of implementation effort. Just trying things out with a naive implementation is unlikely to produce a system that can withstand the requirements of continuous use at reasonable cost. To short-circuit this, it is practical to consider vendor-managed CDE products such as Gitpod. You can try Gitpod for free today, whether it runs in your cloud account or in Gitpod’s.

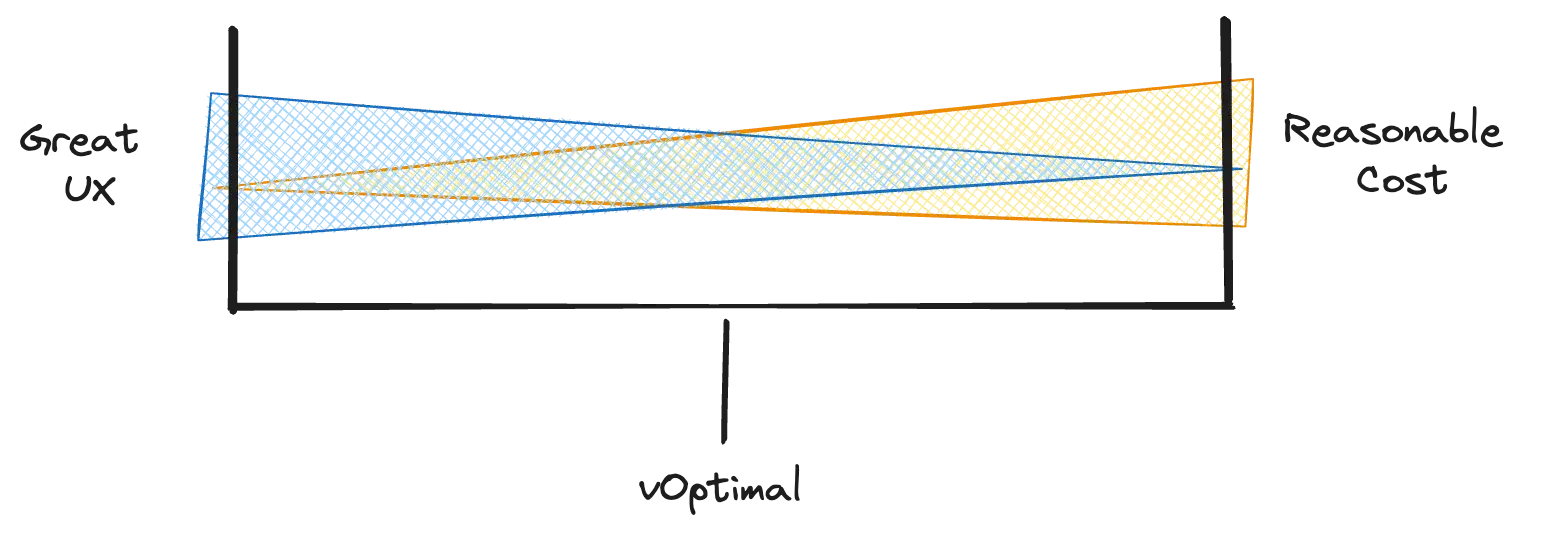

Rethinking the trade-offs

Here's a thought: what if this trade-off could be avoided altogether? Imagine development environments that are available exactly when you need them and gone when you don’t, just like opening and closing a laptop, with all processes resuming precisely where you left them. All of this would come without the cost of perpetually running instances, and with an almost unlimited number of development environments.

That's exactly what we at Gitpod are working on right now. Stay tuned as we reveal more about our journey to rethink the cost vs. UX trade-offs of CDEs over the next few months.